How did we get here?

The background to filtering web access sort of looks like this:

Schools get internet access – children access porn – internet blocked in schools.

That can’t be right?

Let’s try again.

Schools get internet access – children access anything other than the sites they were supposed to – internet blocked in schools.

...Still not right.

Let’s try once more.

Schools get internet access – paedophiles groom school children – internet blocked in schools.

Nope. Let’s throw a ringer into the mix.

Schools get internet access – teachers access inappropriate (and illegal) content – internet blocked in schools.

Surely that can’t be right?

So what’s with this paranoia about the need for filtering (it can’t just be Ofsted safeguarding policy and procedures)?

Okay. Here’s what I know, based on my local, regional and national experience.

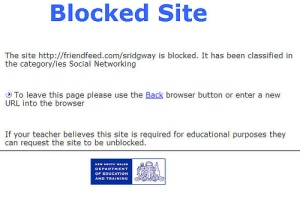

Anyone who has found themselves staring at a computer screen containing the message ‘You do not have access rights to this page’ will know the frustration, sometimes anger and even humiliation that come from the implication that they cannot be trusted to use the web responsibly.

These access and trust issues are not a new phenomenon, though. As a teacher I had endless battles with our Head of ICT, who locked down computers to such an extent that I rarely taught a lesson without at least half the class complaining that they couldn’t complete a task because they ‘were not allowed to’ or had ‘performed an illegal operation’.

Later, as a member of an LA advisory team, I would watch teachers and learners unable to save their work or access resources because of restrictive admin policies set by the school’s technical network staff. It was also at this time, in the late 1990s, that I began to experience the acute frustration of web filtering and ill-thought-out ‘walled gardens’.

This week there was a conversation on Twitter that caused me some concern. A teacher posted a comment stating that an RBC was blocking some web-based services across an entire region. Now, as I work for an RBC, this wasn’t good news and I quickly offered to help (surely it couldn’t be true?). It most definitely wasn’t my RBC.

RBCs (Regional Broadband Consortia) are not all the same; they have differing business models, areas of activity and interests. I work for Northern Grid, a not-for-profit business owned solely by its partner local authorities. In very simple terms: we do what the LAs and schools ask us to do.

We don’t filter in schools – though we do provide filters to be customised at a local level.

We procure, manage and deliver broadband to schools. We filter illegal content at source so, in theory, no illegal content is accessible via our network. It has to be that way. There is no case for knowingly allowing illegal content (weapons, race hate, child exploitation etc.) to be available to any user of our services.

Inappropriate content, however, is an entirely different challenge, and we believe it needs to be identified at a local level.

In our partner local authorities almost all schools have local control over the content available via our network. In some cases, schools (especially primaries) are happy for filtering to be managed centrally by the LA, with the opportunity to customise their access when they identify a need. Unlike many primaries, which may only have technical support for one or two days per week, secondaries tend to have an on-site technical support team and so, in theory, can adjust access in real time. In practice this appears to be a point of frustration in some schools.

So, back to that RBC and the regional blocking. It transpired that the information was inaccurate: E2BN (the RBC mentioned) is not filtering across a region and, as in our area, most of its LAs have their own filtering solutions. The reason there is so much confusion at present is Google’s proposed secure service. If schools use Google’s HTTPS secure search, all users will be able to search unfiltered, because the filter cannot inspect the encrypted traffic, regardless of who put the filter rules in place. Yet I cannot see how it would be acceptable to block existing Google Apps and Docs when many schools are currently using them with colleagues and pupils.

That’s that. I’m not going to speak on behalf of E2BN; it’s not my patch. If it’s your area then I urge you to contact them and start the dialogue. (Why not attend their conference in June, where you will see at first hand the valuable role they play in supporting their schools and LAs?) What is clear, though, is that there must be direct and responsive communication channels between whoever controls the filtering (whether that’s Tim the school-based technician/network manager, the corporate IT team in an LA, or a third-party commercial company) and the end users, typically the teachers in schools. Time is of the essence.

The process of making appropriate web services and sites available to teachers and learners must be fast enough that effective teaching and learning are not held up.

Why would unfiltered access be so bad?

Now that is an excellent question...

I’ve been delivering workshops, giving talks and holding meetings with schools about e-safety for years. Mostly it’s treated with the same interest as all the other ‘do-gooder’ initiatives, until I mention liability.

The headteacher is personally liable for the safety of children in their care. So too is the director of children’s services. ‘Personally’ liable means, in very simple terms, that they can be sued for every penny they personally own.

You may now be sitting up and peering a little more closely at your computer screen as that little nugget sinks in. It has prompted quite indignant responses from headteachers: ‘How can you hold me responsible for stuff that happens on the internet?’ Well, yes, quite. It gets even more interesting than that. It’s actually the governing body that is responsible, but as the budget is usually devolved to the headteacher, it’s most likely the head who will take the rap when ‘that bad thing’ happens to your pupil or colleague.

So, when you see the accountability trail it is easier to understand how Heads and LAs look to consistent filtering to protect the children, the reputation of the institution – and the jobs of our colleagues.

If only it were that simple.

This is really all a question of culture, the racing pace of technology and the behaviours of children and adults.

So, where the school does have autonomy over filtering, that is not in itself a total solution. Too many filtering systems are in the hands of people who appear to think that filtering is there to make their job easier. If you lock down internet access then there is less chance of extra work applying plug-ins, programs, local installs etc., and opportunities for effective and exciting learning are the casualties. Those who control the filters must understand the pedagogy.

To debate whether it should be the school, LA or other organisation in charge of filtering is to underestimate the nature of the challenge. With BSF (Building Schools for the Future) we see remote services as a matter of course. I don’t care whether the guy who makes the changes is down the corridor in a cupboard or in a multinational’s office at the other end of the country. The time taken to make policy changes should be the same.

Filtering must reflect the needs and culture of the school.

Let’s recap on some reasons to filter:

- Protect children from strangers.

Yes, the world is a dangerous place. Prisons across the world hold millions of people who have done ‘bad things’.

The chances of a child being groomed by a paedophile on your systems are remote. Children may also be groomed for race hate, terrorist or criminal activities, or even bullied and intimidated. The thing is, when that bad thing happens, you, your school, your LA and your director of children’s services will all be held accountable.

- Protect children from content

The web has enabled each of us to publish and share in a way undreamt of 20 years ago, and this opportunity has also made the most vile, horrific and disturbing content available to users of all ages. Schools have a moral responsibility to try to ensure such content cannot be accessed, either accidentally or deliberately, by any member of their learning community.

- Protect adults

Sigh. If I hadn’t seen it with my own eyes and heard it with my own ears, I would struggle to believe the amount of inappropriate and sometimes illegal online activity on school devices and internet connections. Whether it’s downloading commercial porn, uploading porn featuring themselves, criminal activity, race hatred or making money via eBay, these things are happening across the education sector from nursery to sixth-form college (I can’t speak for further and higher education).

- Protect systems

The tenacity of malware distributors knows no bounds; they attach malicious files to anything topical, from X Factor to World Cup football. If it’s popular, you can be sure someone will share a ‘bad thing’ to infect your systems and disrupt learning for hours (if you are lucky), days or even weeks.

- Make effective use of time

There are some kids, classes and schools where internet access means messing about and wasting time. The anti-work culture is so entrenched that each web opportunity becomes a point of disruption and frustration. Children will spend hours off task, sending messages, looking at pictures, playing games etc. It is understandable that some schools pretty much ‘block everything’ just to maintain some order in class. This strategy doesn’t address the cause of the learners’ disaffection; it deals with the symptoms, for now.

1,500 words later, what am I saying?

The problem isn’t about filtering, it’s about behaviour. We need to get to a place where:

- Children know how to recognise ‘stranger danger’

- Adults and children know and share an understanding of appropriate online behaviour

- Pastoral systems in schools adequately deal with those whose behaviours are unacceptable

- Effective system protection solutions are in place

By integrating e-safety teaching across the curriculum, we can give children the skills to help ensure they are not vulnerable online.

By ensuring that all members of the learning community are involved in developing acceptable use policies, teachers and learners will self-censor in their use of web and communication systems.

Until schools have identified procedures for dealing with inappropriate and accidental online activity by adults and children, there will not be a consistent message to users, parents and members of the wider community.

Ultimately, one of my key messages to schools is always: ‘We must be seen to be managing the risks to the best of our ability. We cannot eliminate risk entirely.’ The challenge is to be able to stand up as a school, when ‘the bad thing’ happens, and say: ‘We did everything that could reasonably be expected of us to protect our children and adults.’ It is by achieving this that we, as teachers, schools and LAs, will:

- Protect our children

- Protect our colleagues

- Protect the reputation of our school

– and finally, filtering will be responsive – and invisible to those who are committed to safe, creative and effective learning and teaching.

It is a problem in schools, I do realise that, but I have often found that the kids will access whatever they like on their smartphones anyway. There may have been good protection in place on the school network, but many kids soon learnt how to get around it. The teachers didn’t.

Now a lot of the youngsters have found a workaround. Time for adults to share the knowledge FROM the kids; they are often more savvy than their elders. Education is key: the internet is only an extension of real life, full of good and with lots of bad lurking away. One lesson per month or per week could be given over to classes just talking about IT and learning from each other’s experiences. Honest and open dialogue. Not techie. It would benefit a lot of teachers as well as the children.

chris

Thank you so much for taking the time to document this so clearly and non-technically. I shall be passing the link to your post to my SMT, who do want to assess the risks and yet go ahead and develop new media use across the curriculum.

I couldn’t agree more, Simon. The reaction to emotive words is as you say (grooming etc.). I am currently looking at setting up our Websense system to be more open. Particular categories of focus are forums, message boards, blogs and third-party storage (e.g. Google Apps, Dropbox).

The system I am considering would have different filtering criteria for each key stage, plus a whitelist-only level to impose on pupils who abuse the web. This will hopefully bring the conversation about responsibility for what you click on into the classroom, making it, beyond the obviously illegal and inappropriate content, a matter of behaviour rather than blocking.
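The arrangement described above, one category policy per key stage plus a whitelist-only level used as a sanction, could be sketched roughly as follows. This is a hypothetical Python illustration, not Websense configuration: the category names, the `WHITELIST` contents, `Pupil` and `pupil_may_access` are all invented for the example, and illegal content is assumed to have been blocked upstream already.

```python
from dataclasses import dataclass

# Hypothetical category names per key stage; a real product such as
# Websense uses its own category scheme, which is not reproduced here.
# Illegal content is assumed to be blocked upstream, before this check.
KEY_STAGE_CATEGORIES = {
    "KS2": {"education", "reference"},
    "KS3": {"education", "reference", "blogs"},
    "KS4": {"education", "reference", "blogs", "forums", "storage"},
}

# Hypothetical whitelist used for pupils who have misused the web.
WHITELIST = {"www.bbc.co.uk", "docs.google.com"}


@dataclass
class Pupil:
    name: str
    key_stage: str
    whitelist_only: bool = False  # switched on as a behaviour sanction


def pupil_may_access(pupil: Pupil, domain: str, category: str) -> bool:
    """Return True if this pupil may load a page from `domain` in `category`."""
    if pupil.whitelist_only:
        # Sanctioned pupils see only explicitly approved sites.
        return domain in WHITELIST
    # Everyone else gets the category policy for their key stage;
    # an unknown key stage is given no categories at all.
    return category in KEY_STAGE_CATEGORIES.get(pupil.key_stage, set())
```

For example, `pupil_may_access(Pupil("Sam", "KS4"), "example.org", "forums")` returns `True`, while the same call for a pupil created with `whitelist_only=True` returns `False`. Keeping the sanction as a per-pupil flag means it can be lifted without touching the key stage policy.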

Hey Dai – very happy to help/discuss/share ideas if you think it useful. Would be good to meet up again some time :-))

It would be good! I will hopefully finalise the way forward this term. A big concern for me is who is going to do what. Any filtering decisions should be made by mutual agreement of key stakeholders, or their representatives. Once the system is set up, it will need careful monitoring. Shouldn’t be too hard, but I would welcome a chat about the nitty-gritty when we get closer.

Good piece, thanks for writing it. My own (much shorter) response to the news of our RBC blocking Google Apps is on my blog here: http://bit.ly/deZ5Nc

One point from the end of your piece: that line about being able to say that ‘we did everything that could reasonably be expected of us to protect our children and adults’. At what point does the reasonable protection of children and adults interfere with the reasonable provision of learning and teaching resources for those children and adults? Not sure I have the answer, and it’s an intrinsically subjective issue, but worth asking, no?

Thanks for your comment. You are correct that managing risk, litigation and liability are areas that need to be considered rationally and sensitively.

Schools in SWGfL can customise their filtering policies; here is the information they need:

‘Schools can request to have https://www.google.com unblocked by:

a) visiting the web page at http://googlessl.swgfl.org.uk and completing the form

or, if having difficulties, by:

b) calling the SWGfL help desk on 0845 3077870

c) or contacting your Local Authority support.’

Solution: school policy in place to make sure the school is protected from litigation. Education of parents. Education of teachers. Listen to the kids. I mean really listen. Many of them know more than you think. I agree, this has to be done through behaviour, not blocking or filtering. Once everyone is aware of the dangers, they can harvest all the fantastic stuff out there. The next generation is already growing up digital. It is the older generation that needs help to catch up in order to protect the youngsters.

Excellent post Simon – a fresh take on what is being made into a perennial problem.

Awareness of digital footprints, appropriate use of resources and the advent of digital citizenship will be a focus for our school next year, 12 years after our school’s initial connection to the world wide web.

12 years of blocking and filtering that has created more problems and frustrations than it solved. 12 years without much consideration for missed learning opportunities.

Time to take off the trainer wheels and teach the road rules.

This wiki by Andrew Churches offers interesting considerations for a school policy/programme. http://edorigami.wikispaces.com/The+Digital+Citizen

Absolutely agree! As @mattlovegrove said to me ages ago, ‘we don’t stop children playing at swimming pools just because they are dangerous…we TEACH them to swim.’

We need to TEACH online safety not create some false cotton wool society where children then don’t know how to respond and/or look for help.

I worked at a council for a while for an outsourcing contractor. It was a district council (DC), so not quite at the level of having schools to look after. The staff weren’t even allowed to do ANY internet shopping (even if they entered their own payment details) in case the council was made liable for the order? Eh? It actually meant that I would be breaking their internet access policy just to do my job, as I had to order (legitimately) office stationery and equipment. I had to have my own internet connection, which was ridiculous really.

Nothing is straightforward in this issue, but teaching awareness is, I believe, far better than blocking access to everything. I initially agreed with Internal Audit that we would have no specific site filtering (although this was before Facebook!). We had no worse a problem with inappropriate internet use then than they did once they had a sophisticated (for which read draconian) filtering system, under which it took about three weeks to get a legitimate site unblocked. I think they had a worse problem, because people felt they were being treated like children and so acted like them. I’m sure there is a parallel between this situation and the ones you describe. Your thoughts are exactly how I have always tried to run things as an IT manager but, unfortunately, the risk-averse in SMTs want all bases covered.

All I can say is that Simon should be king of school internet filtering. If only this kind of approach and understanding were what is actually being applied at LA and school level.

I too stand in computer rooms, having prepared and set lessons in which students access sites to continue their learning, only to find the sites blocked (and these are 16- to 19-year-olds), and the situation cannot be resolved quickly. The frustration for staff is that they can no longer set the agenda in the classroom, yet they are held accountable for the success of their students. I understand the risks and take great care to ensure e-safety, yet I have no authority to see it through; our lessons are controlled by IT staff who, with the best will in the world, are not familiar with what should be the content of my lessons. That said, a great many staff avoid the issue by avoiding ed-tech altogether, which is a problem for the future of our students and of ed-tech.

About five years ago, in conjunction with an IT company, we set up the internet security for a private boarding (and day) school. I should point out that our company specialises in Information Assurance/Information Security from a design/consulting stance (we don’t sell boxes or products). Each child (and member of staff) had their own network ID and password, and each was assigned to a specific group, e.g. grade 1, grade 2, teacher, finance, school nurse, administration etc. This meant that the filtering policy could be set specifically for each group: e.g. only the school nurse needed access to sites dealing with drugs, and Grade 6 could access more than Grade 1. Time was also included in the equation, so that teachers were given access to online banking but not during core teaching time, and pupil access was limited to school hours (i.e. when supervision was available). All the filtering policies were developed in association with the school (Head, senior teachers, Nurse, Admin etc.) and monitored and tweaked over time. All that can be said is that it all worked very well.
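The group- and time-based policies in this comment can be sketched as a lookup of (group, category) followed by a time-window check. This is a hypothetical Python illustration rather than the product that school used: the group and category names, `Window`, `Rule`, `group_may_access` and the hours chosen for school time (08:30 to 16:00) and core teaching time (09:00 to 15:30) are all assumptions. Anything not listed for a group is denied.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import Optional


@dataclass(frozen=True)
class Window:
    start: time
    end: time

    def contains(self, t: time) -> bool:
        return self.start <= t < self.end


@dataclass(frozen=True)
class Rule:
    window: Optional[Window] = None  # None means allowed at any time
    outside: bool = False            # True: allowed only OUTSIDE the window

    def permits(self, t: time) -> bool:
        if self.window is None:
            return True
        inside = self.window.contains(t)
        return not inside if self.outside else inside


# Assumed hours; a real school would set its own.
SCHOOL_HOURS = Window(time(8, 30), time(16, 0))
CORE_TEACHING = Window(time(9, 0), time(15, 30))

# Hypothetical group policies: pupils only during supervised hours,
# teachers' banking only outside core teaching time, drugs information
# for the nurse only, and Grade 6 with more than Grade 1.
POLICIES: dict[str, dict[str, Rule]] = {
    "grade1": {"education": Rule(SCHOOL_HOURS)},
    "grade6": {"education": Rule(SCHOOL_HOURS), "news": Rule(SCHOOL_HOURS)},
    "teacher": {"education": Rule(), "banking": Rule(CORE_TEACHING, outside=True)},
    "nurse": {"education": Rule(), "drugs_info": Rule()},
}


def group_may_access(group: str, category: str, when: datetime) -> bool:
    """Default deny: only categories listed for the group, at permitted times."""
    rule = POLICIES.get(group, {}).get(category)
    return rule is not None and rule.permits(when.time())
```

With these assumed hours, a teacher requesting a banking site at 10:00 is refused but the same request at 17:00 is allowed, while a Grade 1 pupil can reach educational sites only during school hours.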

So much to say in reply to this. First, these are my views and not necessarily those of E2BN itself.

Second, the good news is that Google appear to have realised they made a mistake with the way their Secure Search was implemented. I’ve just had an email from them directing me to this:

http://googleenterprise.blogspot.com/2010/06/update-on-encrypted-web-search-in.html

I agree with much of what Simon says but not all. I’ll not go into too much detail (my typing’s not up to it!), but as far as I am aware all RBCs are not-for-profit businesses (E2BN certainly is).

The E2BN filtering system (http://protex.e2bn.org) is a hierarchical system allowing filtering at both LA and school level, with the lower level able to override the higher. Schools can buy into a local filtering server and control their own level, or use the service provided at LA level. This is their choice.

LAs can, if they so choose, override the general RBC filter policy, for example to allow a site that E2BN blocks or to block one we allow.
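The hierarchy described here, where the school level can override the LA and the LA can override the RBC, amounts to checking the most local policy first. The Python sketch below is a hypothetical illustration, not how Protex is implemented: `resolve`, the per-level dictionaries and the `default` for unlisted sites are assumptions, and `blocked_at_source` models content such as illegal material that, as the original post describes, is filtered at source and should not be overridable locally.

```python
# A policy maps a site to True (allow) or False (block). Sites a level does
# not mention fall through to the level above it.
Policy = dict[str, bool]


def resolve(site: str, school: Policy, la: Policy, rbc: Policy,
            blocked_at_source: frozenset[str] = frozenset(),
            default: bool = True) -> bool:
    """Decide whether `site` is reachable, letting lower levels override higher ones.

    `blocked_at_source` stands in for content (such as illegal material)
    that is filtered before any local policy applies and cannot be
    overridden. `default` is the hypothetical decision for sites that
    no level mentions.
    """
    if site in blocked_at_source:
        return False
    # Check the most local level first: school, then LA, then RBC.
    for policy in (school, la, rbc):
        if site in policy:
            return policy[site]
    return default
```

For example, if the RBC policy blocks `video.example` but the LA allows it, `resolve("video.example", {}, {"video.example": True}, {"video.example": False})` returns `True`; a school entry for the same site would take precedence over both.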

There are a range of age related filters that can be applied to users and, in particular, the staff profile has much greater access than the student ones.

On every block page there is the opportunity to comment on the site being blocked and request that it is unfiltered; anyone, staff or pupil, can send a request. These are processed every day and, if uncontroversial (which most are), actioned within 24 hours.

To diabarnes: this is what Protex does. Why not take a look at the Protex site and then start a conversation with us? (You don’t need to be one of E2BN’s schools.)

To edd35: does that extend to having pornographic books in the library and teaching pupils not to read them? If not, why not? What is the difference?


1. What an interesting and useful post.

2. When are schools going to wake up and block the endemic “user profiling” that takes place on the net? Children are constantly being tracked by the likes of AudienceScience, Omniture, comScore, Yieldmanager, DoubleClick and hundreds of other advertising companies that consign their browsing habits to data warehouses, which might have implications for their right to privacy in their future adult lives.

[…] Blog-posting about internet filtering in schools – the background to why and how web filtering happens in schools, from a school, local authority and national perspective. […]